I am wondering why the code for training only the last layer works, because that branch only contains `pass`:

    for param in model.parameters():
        param.requires_grad = False
    ...
    if args.trainable_layers == "last_layer":
        pass
    ...

I have also set it more explicitly, with the same results:

    for param in model.parameters():
        param.requires_grad = False
    ...
    if args.trainable_layers == "last_layer":
        # Get the last layer
        last_layer = list(model.children())[-1]
        # Make the last layer trainable
        for param in last_layer.parameters():
            param.requires_grad = True
    ...

Also, what do you think about adding a test for the last two layers plus the last two transformer blocks, as in your article about finetuning LLMs?


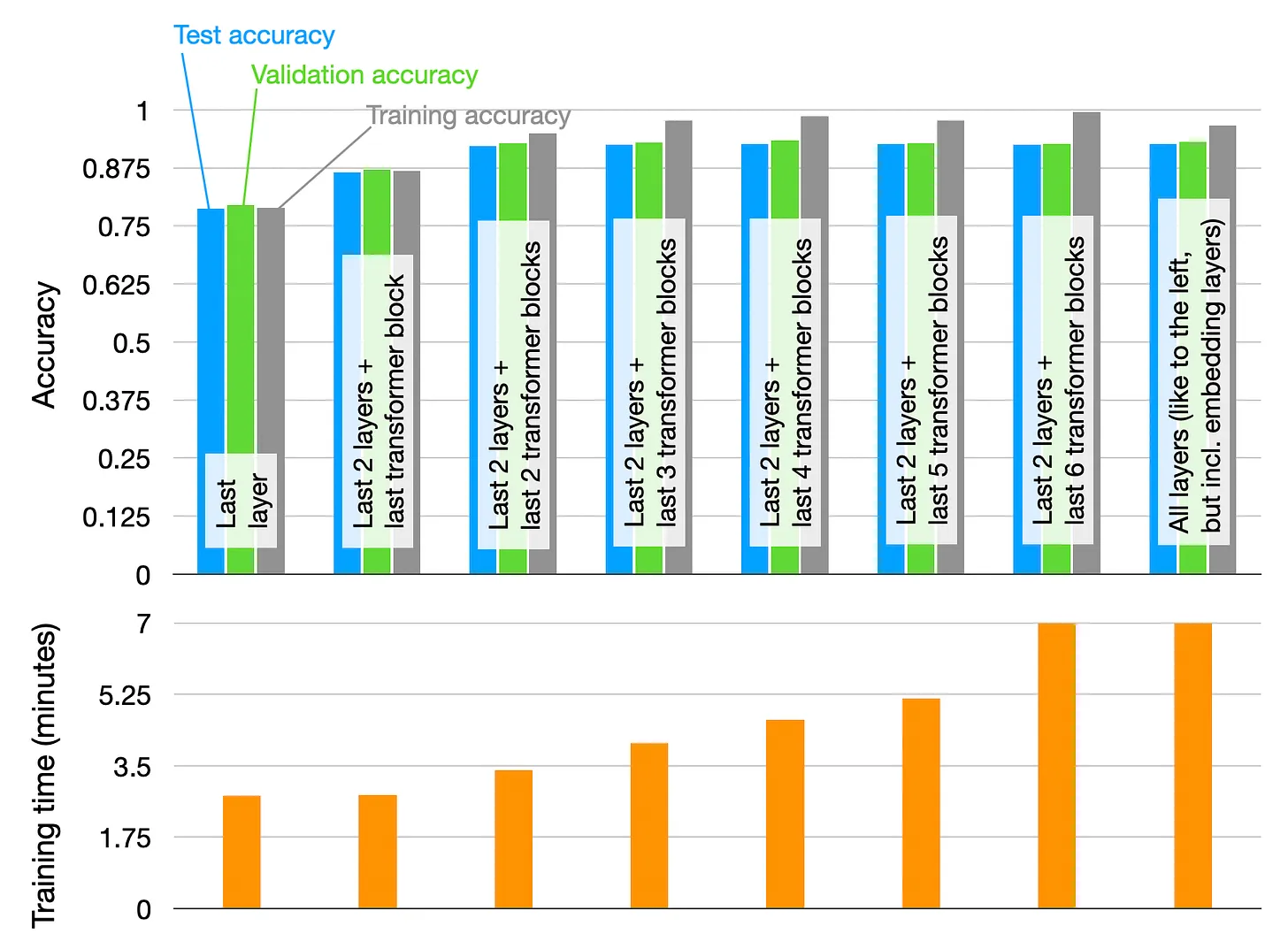

That was a good suggestion; the performance with the last two blocks trainable is quite good!
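For anyone who wants to try the same setup, here is a minimal sketch of unfreezing the last two blocks plus the last two layers. It uses a hypothetical stand-in model: the class `TinyModel` and the attribute names `blocks`, `final_norm`, and `out_head` are assumptions for illustration, not the actual model from this thread, and "the last two layers" is interpreted here as the final norm and the output head.

```python
import torch.nn as nn


class TinyModel(nn.Module):
    """Hypothetical stand-in; plain Linear layers play the role of transformer blocks."""

    def __init__(self, dim=16, n_blocks=4, n_classes=2):
        super().__init__()
        self.blocks = nn.ModuleList([nn.Linear(dim, dim) for _ in range(n_blocks)])
        self.final_norm = nn.LayerNorm(dim)
        self.out_head = nn.Linear(dim, n_classes)

    def forward(self, x):
        for block in self.blocks:
            x = block(x)
        return self.out_head(self.final_norm(x))


model = TinyModel()

# Freeze everything first.
for param in model.parameters():
    param.requires_grad = False

# Unfreeze the last two blocks.
for block in model.blocks[-2:]:
    for param in block.parameters():
        param.requires_grad = True

# Unfreeze the last two layers (assumed here: final norm and output head).
for module in (model.final_norm, model.out_head):
    for param in module.parameters():
        param.requires_grad = True

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
frozen = [name for name, p in model.named_parameters() if not p.requires_grad]
print("trainable:", trainable)
print("frozen:", frozen)
```

With a real model, the slice `model.blocks[-2:]` would need to point at whatever container actually holds the transformer blocks.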

Oh, that's simply because we first make all layers untrainable and then replace the last layer with `nn.Linear`, and `nn.Linear` is trainable by default. That's because `nn.Linear` uses `nn.Parameter`, which has `requires_grad=True` by default.
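Here is a minimal sketch of that behavior, using a small stand-in `nn.Sequential` model rather than the actual model from this thread (the layer sizes and the variable names are made up for illustration):

```python
import torch.nn as nn

# Stand-in model; the real model discussed in this thread is different.
model = nn.Sequential(
    nn.Linear(8, 16),
    nn.ReLU(),
    nn.Linear(16, 4),  # original output layer
)

# Step 1: freeze every parameter that exists right now.
for param in model.parameters():
    param.requires_grad = False

# Step 2: replace the last layer with a fresh nn.Linear.
# Its weight and bias are new nn.Parameter objects, so they
# start out with requires_grad=True even though nothing sets it.
model[2] = nn.Linear(16, 2)

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['2.weight', '2.bias']
```

So the `pass` branch works only because the replacement happens after the freezing loop. If the new layer were created before the loop, it would be frozen along with everything else.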